General Information

Vercel has announced v0.dev, an AI tool that lets developers and designers generate JSX code styled with Tailwind CSS. The only drawback of the launch was a waiting list, so v0.dev was not available to everyone at first. Now anyone with a Vercel account can start using it. With a limited number of tokens, you can submit prompts to generate views and components and speed up app development.

Tools like this can bridge the gap that often exists between developers and designers. Beyond easing collaboration, they can save companies substantial time in the early phases of projects and product launches. Let’s see how this AI tool performs in practice.

v0.dev works much like ChatGPT, but it focuses exclusively on generating user interface code, which makes it useful for building web components. It uses the shadcn/ui and Tailwind CSS libraries to create views and the corresponding code.

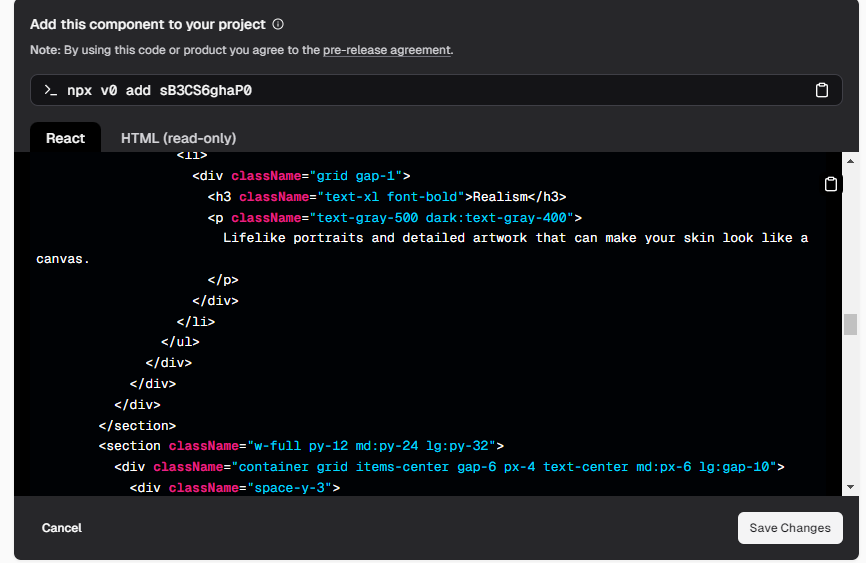

Once the code is generated, you can add the component to your application with the npx command that v0.dev provides.

Test Scenarios

- Does it provide production-ready code?

- Does it understand the images we provide?

- Can it be used in an existing project?

- Can it be used for new projects?

First experiment – Store product view

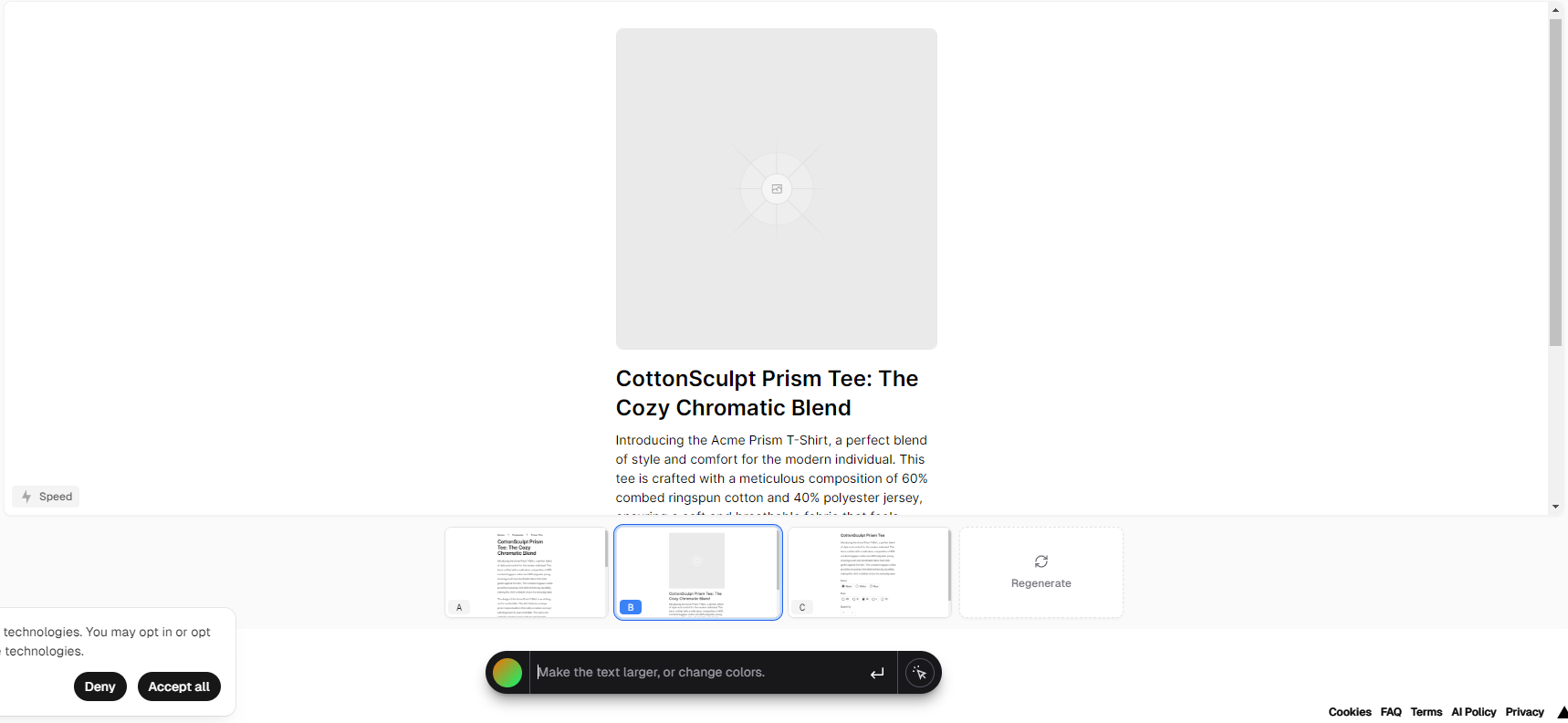

The first experiment was to create a product page view. The prompt I tested was “mobile view for a product page with image, title, description, stock quantity, add to cart button, and social share buttons in the style of Airbnb.”

After I entered the prompt and waited briefly, v0.dev returned three results, each with an interactive preview of the view and the corresponding code, ready to inspect and copy.

I was pleasantly surprised: at first glance, the generated component looked quite impressive, although each variant had minor discrepancies. These can easily be fixed by hand. Visually, it’s definitely a positive outcome.

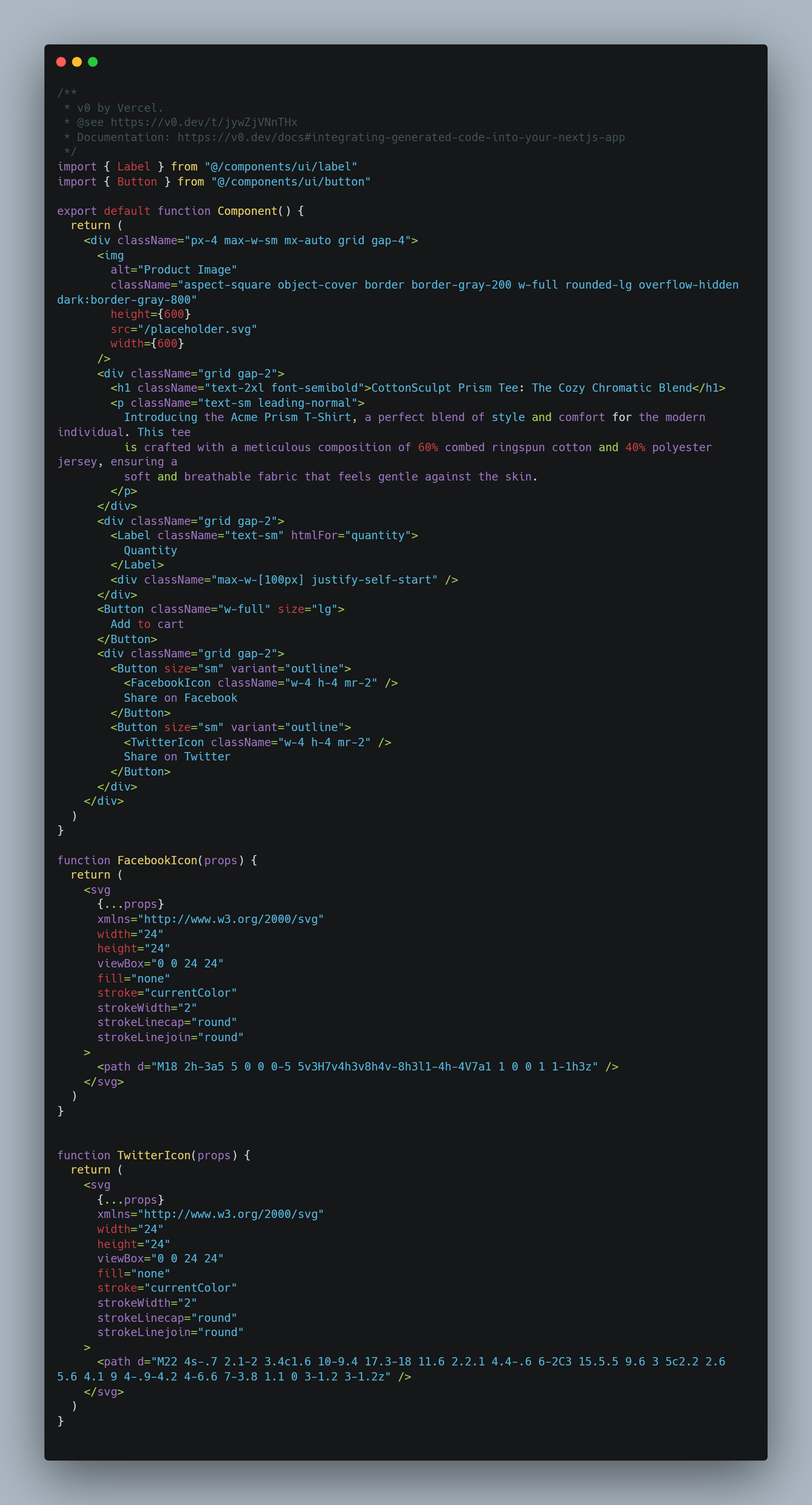

As for the generated code, I would prefer a component divided into individual sections or elements, which would improve readability. On the plus side, I appreciate that it generated the SVG icons as separate components, which makes them easier to reuse.

Second experiment – One-page website

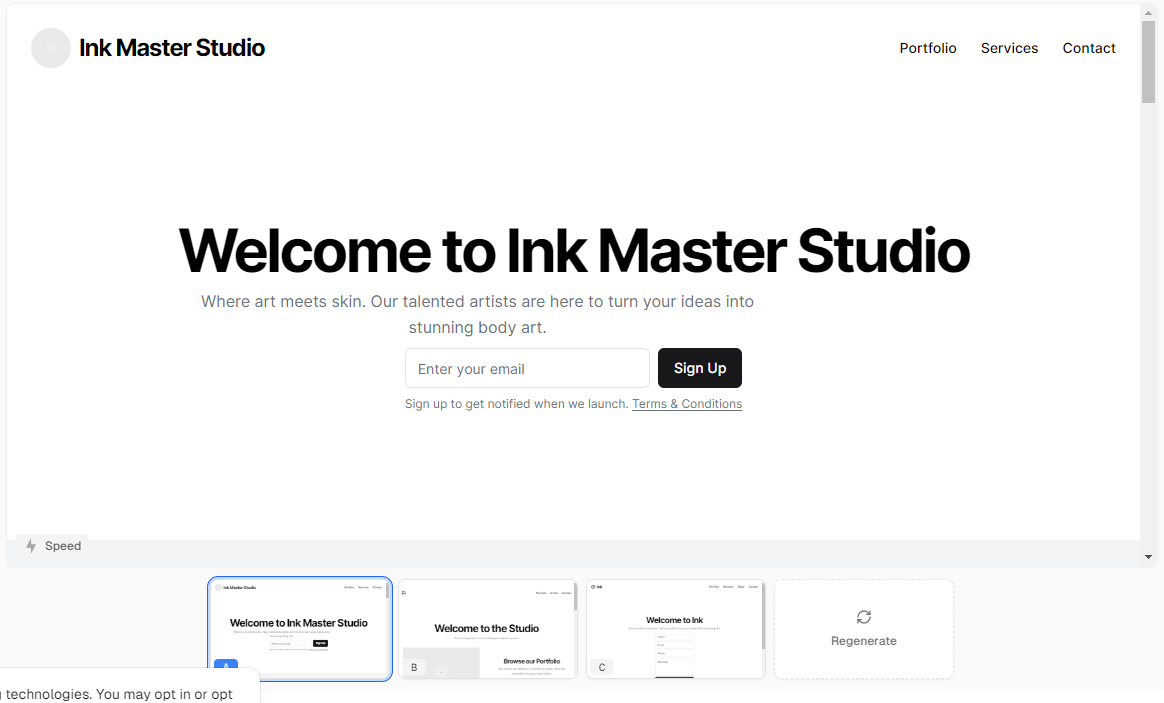

The prompt used was “one-page simple modern website for a tattoo studio.”

For this iteration, v0.dev went the extra mile and generated a full one-page website with animated navigation, a user-friendly contact form, and several sections below the main viewport. Visually, it’s quite presentable, although some elements would need manual tweaks.

In the case of larger generated components, the code looks significantly worse.

There is no division into components, the code repeats itself, and the markup is deeply nested. With larger components, v0.dev starts producing spaghetti code. However, if you passed such code to ChatGPT and asked it to refactor the code and split it into components, the result might be usable.
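To illustrate the kind of refactor meant here, the sketch below replaces repeated, hand-written section markup with one reusable render function mapped over data. It is a framework-free TypeScript analogy that builds HTML strings rather than JSX; the `Section` type, the sample content, and the Tailwind classes are hypothetical and not taken from v0.dev’s output. In a React project, `renderSection` would be a component, the `map` call would live in JSX, and `class` would become `className`.

```typescript
// Hypothetical content for a one-page site. In the repetitive version,
// each entry would be a separately hand-written block of markup.
interface Section {
  id: string;
  title: string;
  body: string;
}

const sections: Section[] = [
  { id: "about", title: "About the studio", body: "Who we are and how we work." },
  { id: "artists", title: "Our artists", body: "Meet the team and their styles." },
  { id: "contact", title: "Book a session", body: "Send us your idea and preferred dates." },
];

// Escape text before interpolating it into markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// One reusable "component": the shared structure and Tailwind classes are defined once.
function renderSection({ id, title, body }: Section): string {
  return [
    `<section id="${escapeHtml(id)}" class="px-4 py-12 md:py-24">`,
    `  <h2 class="text-2xl font-bold md:text-3xl">${escapeHtml(title)}</h2>`,
    `  <p class="mt-4 text-gray-600">${escapeHtml(body)}</p>`,
    `</section>`,
  ].join("\n");
}

// The page is just the data mapped through the component.
function renderPage(items: Section[]): string {
  return items.map(renderSection).join("\n");
}

console.log(renderPage(sections));
```

Adding a fourth section now means adding one object to the array instead of copying another block of nested markup.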

Another plus is responsiveness: v0.dev automatically makes elements responsive by giving them Tailwind breakpoint-prefixed classes such as md: and lg:.
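Tailwind’s responsive prefixes are mobile-first: an unprefixed class applies at every width, and a class such as md:grid-cols-2 applies from that breakpoint’s minimum width upward, overriding smaller ones. The sketch below models that rule for a single utility group using Tailwind’s default breakpoints (sm 640px, md 768px, lg 1024px, xl 1280px, 2xl 1536px). `resolveUtility` is a hypothetical helper for illustration, not part of Tailwind, and it ignores custom breakpoints and non-breakpoint variants such as hover:.

```typescript
// Tailwind CSS default breakpoints: each prefix means "min-width: N px and up".
const BREAKPOINTS = new Map<string, number>([
  ["sm", 640],
  ["md", 768],
  ["lg", 1024],
  ["xl", 1280],
  ["2xl", 1536],
]);

// Hypothetical helper: returns which utility starting with `utilityPrefix`
// is in effect at `viewportWidth`. Mobile-first: among the classes whose
// breakpoint applies, the one with the largest min-width wins.
function resolveUtility(
  classList: string,
  utilityPrefix: string,
  viewportWidth: number,
): string | undefined {
  let best: { minWidth: number; utility: string } | undefined;
  for (const cls of classList.split(/\s+/).filter(Boolean)) {
    const parts = cls.split(":");
    if (parts.length > 2) continue; // stacked variants (e.g. md:hover:...) are out of scope
    const [variant, utility] = parts.length === 2 ? [parts[0], parts[1]] : ["", parts[0]];
    if (!utility.startsWith(utilityPrefix)) continue;
    // Unprefixed classes apply from 0px; unknown variants (e.g. hover:) are skipped.
    const minWidth = variant === "" ? 0 : BREAKPOINTS.get(variant);
    if (minWidth === undefined || viewportWidth < minWidth) continue;
    // Strict ">" keeps the first class if two share the same breakpoint.
    if (best === undefined || minWidth > best.minWidth) {
      best = { minWidth, utility };
    }
  }
  return best?.utility;
}

const gridClasses = "grid grid-cols-1 md:grid-cols-2 lg:grid-cols-3";
console.log(resolveUtility(gridClasses, "grid-cols-", 375)); // → grid-cols-1
console.log(resolveUtility(gridClasses, "grid-cols-", 800)); // → grid-cols-2
console.log(resolveUtility(gridClasses, "grid-cols-", 1280)); // → grid-cols-3
```

This is why such a class list reads from the smallest to the largest screen: the unprefixed class describes the mobile layout, and each prefix layers a wider layout on top.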

v0.dev also uses ready-made components from the shadcn/ui library, so all generated views share the same style. This cuts both ways: the views are consistent with one another, but they follow the look of shadcn/ui rather than your own design.

Third experiment – UI based on image

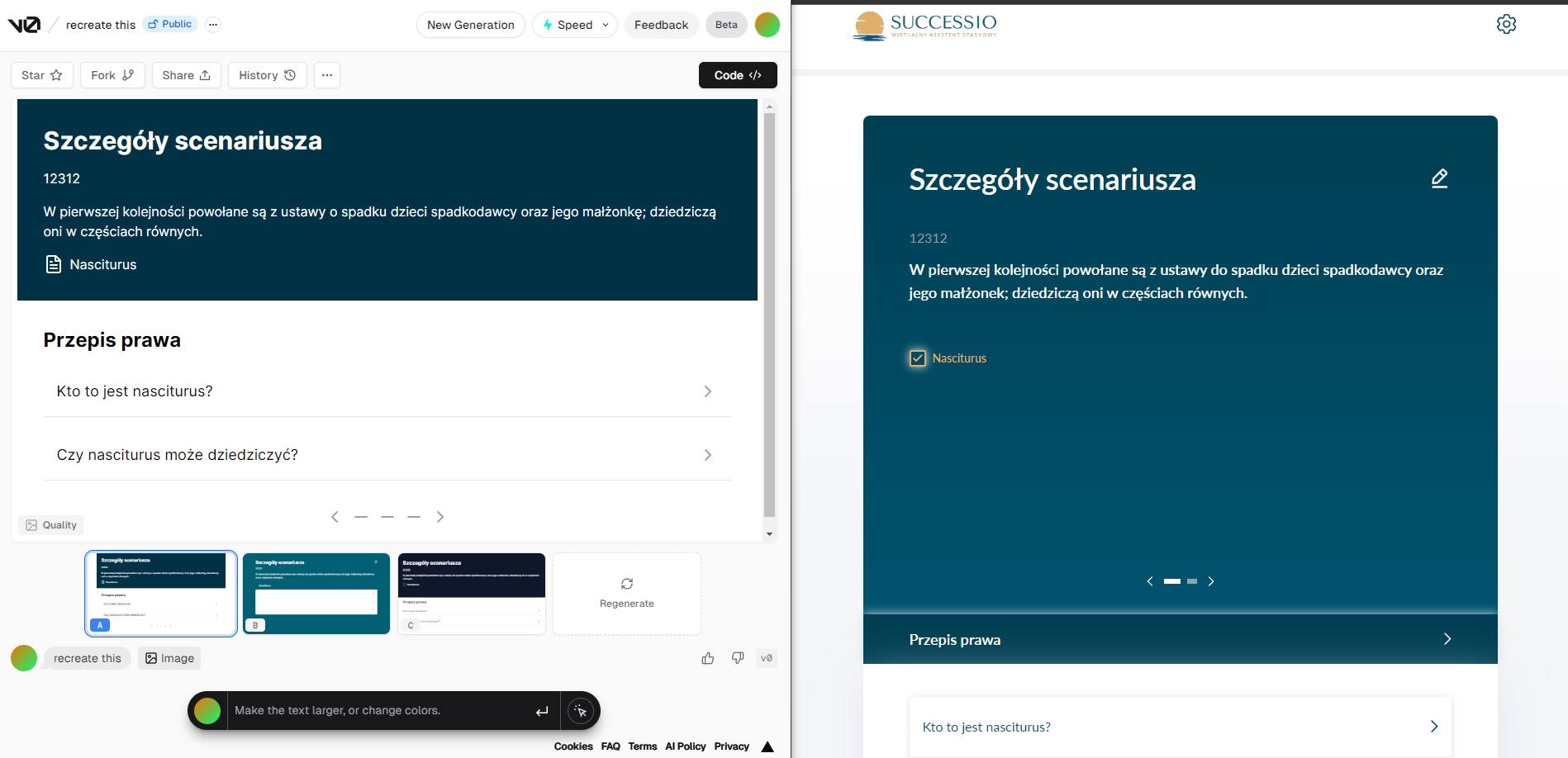

The last experiment tested how well v0.dev creates a UI from an image. I used a screenshot of an application we developed. The prompt was “recreate this.”

The results varied, and despite resubmitting the prompt many times, I could not get a satisfactory result. Nevertheless, for prototyping, I consider v0.dev a valuable tool with significant potential to become part of our daily workflow.

Summary

Does it provide production-ready code?

No. The code provided by v0 requires adjustments, refactoring, and applying the DRY (“Don’t Repeat Yourself”) principle. Without this, after copying in several components, we may end up with spaghetti code. Additionally, v0 relies on the shadcn/ui component library, whereas many projects have their own design system, which is another issue to consider. Simple components may be the exception, since their library elements can easily be swapped out.

Does it understand the images we provide?

Yes, but the generated result usually needs many adjustments, which would take more time than recreating the UI manually. Even with simple images, v0 may change the order of elements or the layout, or produce something completely different.

Can it be used in an existing project?

I think using v0 in an existing project would be challenging, because there is currently no way to give this AI the visual context of our design, and it relies on library components. Adjusting the generated results to match our design could take a lot of time or make our code messy.

Can it be used for new projects?

In my view, v0 is best suited to new projects and the early stages of application development, for example when building an MVP, or to projects where design is not a priority and it makes sense to adopt an existing design system. In those cases, it lets us build part of the UI quickly and easily.