Why Do Own LLMs Matter?

Running your own LLM serves various purposes and offers numerous benefits:

- Tailored Solutions: Custom LLMs can be trained to address specific tasks or domains, providing tailored solutions to unique challenges.

- Enhanced Performance: By fine-tuning the model on relevant datasets, users can achieve higher accuracy and better performance for their specific use cases.

- Improved Efficiency: Own LLMs enable automation of tasks such as text generation, summarization, sentiment analysis, and more, leading to increased efficiency and productivity.

- Personalization: LLMs can be utilized to personalize user experiences, such as generating personalized recommendations, responses, or content based on individual preferences and behavior.

- Domain Expertise: Organizations can leverage own LLMs to encode domain-specific knowledge and insights, facilitating better decision-making and problem-solving within their respective fields.

- Data Privacy: Hosting your LLM allows you to maintain control over sensitive data, ensuring compliance with privacy regulations and safeguarding proprietary information.

- Innovation and Research: Researchers and developers can utilize own LLMs to explore new NLP techniques, develop novel applications, and contribute to advancements in the field of artificial intelligence.

Overall, running your own LLM empowers you to harness the full potential of natural language processing technology, driving innovation, efficiency, and personalization across various industries and applications.

Democratizing Access to LLMs: The Rise of the Ollama Project

Source: https://ollama.com

In recent years, the field of artificial intelligence (AI) has witnessed remarkable advancements, particularly in natural language processing (NLP). One of the most significant developments has been the creation of large language models (LLMs) such as OpenAI’s GPT series, which have demonstrated impressive capabilities in understanding and generating human-like text. However, harnessing the power of these models for specific tasks often requires considerable computational resources and expertise in machine learning. This barrier to entry has limited access to the benefits of LLMs for many individuals and organizations. Enter the Ollama project, a groundbreaking initiative aimed at simplifying the process of running your own LLM model.

Understanding the Ollama Project

The Ollama project emerged from a collaborative effort to democratize access to LLM technology. It offers a user-friendly platform that streamlines the deployment and management of custom LLMs, empowering users to leverage state-of-the-art natural language understanding and generation capabilities without the need for extensive technical knowledge or infrastructure.

At its core, the Ollama project provides a comprehensive framework for training, fine-tuning, and deploying LLMs tailored to specific applications or domains. Whether you’re a researcher exploring novel NLP techniques, a developer integrating language capabilities into your software, or a business seeking to enhance customer interactions, Ollama offers a versatile solution to meet your needs.

Simplifying the Process

One of the key advantages of the Ollama project is its emphasis on simplicity. Traditionally, training an LLM from scratch requires expertise in machine learning, access to powerful hardware, and significant computational resources. However, Ollama abstracts away much of this complexity, offering an intuitive interface and automated workflows that guide users through the process step by step.

Launching Your LLM Journey: Simple Installation Steps

To embark on the journey of harnessing the power of large language models (LLMs) through the Ollama project, the first step is simple: visit Ollama.com. Upon arrival, you’re greeted with a straightforward invitation. The website offers a gateway to a world of linguistic exploration and innovation. Whether you’re interested in running Llama 2, Code Llama, or other customizable models, Ollama provides the tools and resources to make it happen.

At the heart of the Ollama experience lies the download button, beckoning users to begin their adventure. With just a click, users gain access to a wealth of possibilities for customizing and creating their own LLMs. The platform caters to users across different operating systems, including macOS, Linux, and Windows (preview), ensuring inclusivity and accessibility for all.

Installation is a breeze, requiring only a moment of your time. Whether you’re on macOS, Windows, or Linux, Ollama guides you through the process with ease. With a few simple clicks, you’ll be ready to delve into the world of large language models, unleashing the potential for creativity, innovation, and discovery.

Streamlined Deployment with Docker: Ollama Goes Containerized

Source: https://ollama.com

Ollama now offers an official Docker image, simplifying the setup within Docker containers. This initiative ensures local interactions with models without compromising data privacy. For Mac users, Ollama supports GPU acceleration and provides a user-friendly CLI and REST API. Linux users with Nvidia GPUs can also leverage Docker for GPU-accelerated execution. With Ollama in Docker, running models like Llama 2 is seamless, and additional models are readily available in the Ollama library. For more information, visit Ollama’s official blog.

Exploring Ollama’s Diverse Model Library

Ollama offers a vast array of models catering to various needs, and the collection continues to expand. Some of the most popular models available on Ollama include:

1. Gemma: Lightweight models by Google DeepMind.

2. Llama 2: Foundation models ranging from 7B to 70B parameters.

3. Mistral: A 7B model by Mistral AI, updated regularly.

4. Mixtral: High-quality Mixture of Experts (MoE) models by Mistral AI.

5. LLaVA: A multimodal model combining vision and language understanding.

6. Neural-chat: A fine-tuned model with good domain and language coverage.

7. CodeLlama: A model specialized in generating and discussing code.

8. Dolphin-Mixtral: An uncensored model excelling at coding tasks.

9. Qwen: A series of models by Alibaba Cloud with a wide parameter range.

10. Orca-Mini: A general-purpose model suitable for entry-level hardware.

And many more. Ollama’s library encompasses models for various tasks such as coding, chat, scientific discussions, and more. The platform ensures users have access to the latest advancements in natural language processing, offering limitless opportunities for exploration and innovation. As the demand for specialized models grows, Ollama continues to add new models, ensuring users have the tools they need to tackle diverse challenges effectively.

Running One of the Popular Models: Mistral

For testing purposes, we’ll utilize the Mistral model, renowned for its impressive performance across various benchmarks. Mistral, available in the 7B version, often outshines competitors such as Llama 2 7B and even Llama 2 13B in terms of average performance.

In the realm of large language models, the term “B” refers to billions of parameters, indicating the scale and complexity of the model. As the parameter count increases, so do the computational demands and the model’s potential capabilities. Therefore, higher “B” models tend to exhibit enhanced performance but require more significant computational resources for training and inference.

Despite being a 7B model, Mistral consistently demonstrates superior performance compared to its counterparts. This distinction underscores the importance of benchmarking models across various metrics to identify the most suitable option for specific tasks. As the demand for more advanced models grows, so too do the requirements for computational resources, highlighting the need for efficient infrastructure and optimization techniques to support large-scale model deployment.

By leveraging Mistral for testing purposes, we aim to gain insights into its performance and capabilities, shedding light on its suitability for a wide range of natural language processing tasks. Through rigorous evaluation and comparison with other models, we can assess Mistral’s strengths and weaknesses, enabling informed decision-making when selecting models for real-world applications.

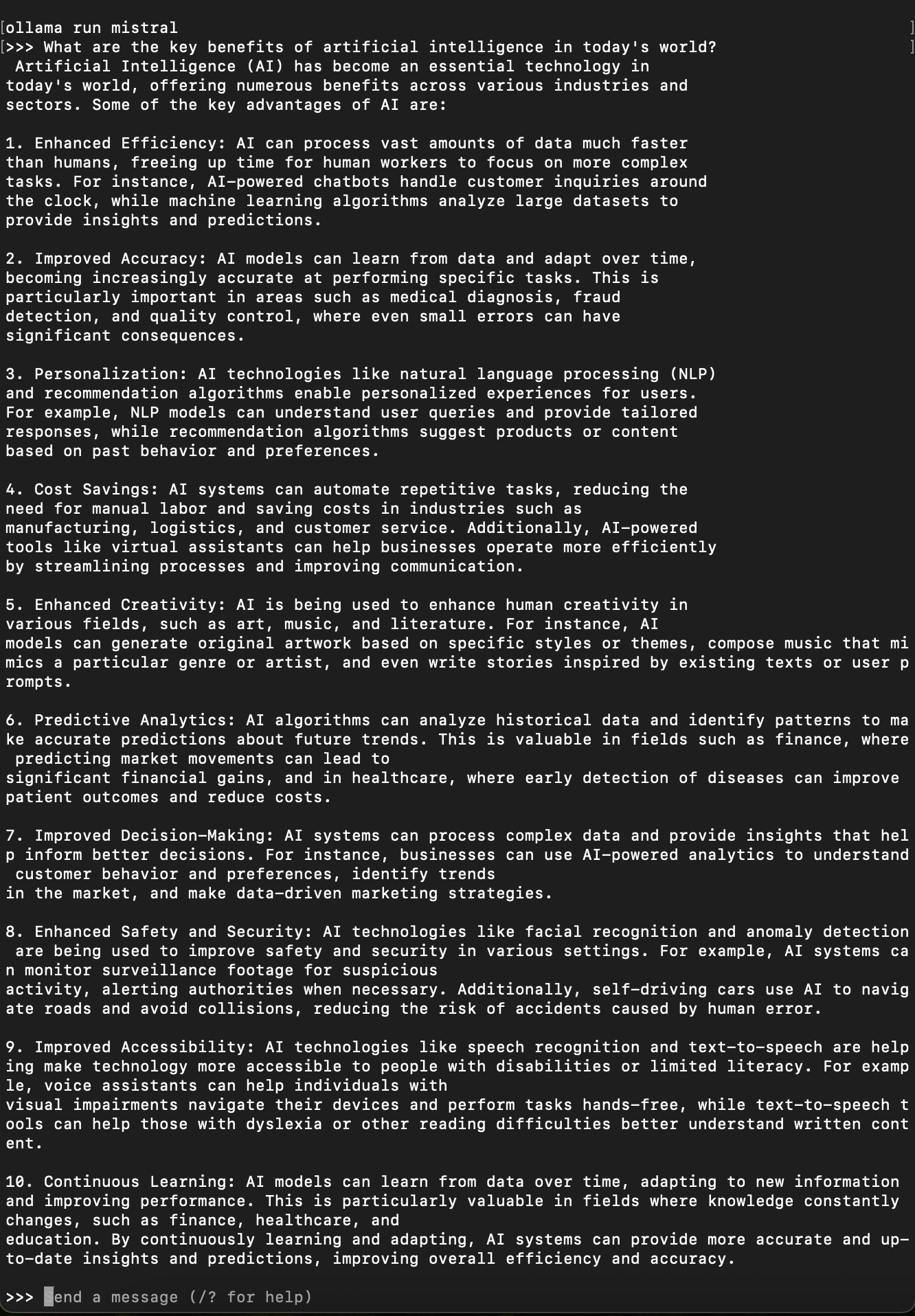

To initiate the deployment of the Mistral model or any other desired model, the process is remarkably straightforward. Simply navigate to the terminal and enter the command: “ollama run mistral” (or replace “mistral” with the name of any other model).

Upon executing this command for the first time, the model download process will commence automatically. In the case of Mistral, for instance, the initial download size might be substantial, approximately 4.1GB. This download ensures that you have access to the requisite model parameters and configurations for seamless utilization during your natural language processing tasks.

During the model deployment process, we’ll ask the following question: “What are the key benefits of artificial intelligence in today’s world?” This inquiry will serve as a test to evaluate the model’s ability to generate coherent, accurate, and informative responses across various topics.

We’ve successfully executed our first query to the model, obtaining a response effortlessly. While the comprehensiveness of the answer may require further evaluation, it’s important to note that this is one of the simplest models available. As we continue to explore its capabilities. Ollama also provides a convenient REST API, allowing users to input prompts and select models to receive responses effortlessly. For more updates and insights, we encourage readers to stay tuned to the GoodSoft blog.